Artificial intelligence (AI) relies on sophisticated algorithms, and those algorithms are themselves built on mathematics, in particular linear algebra. This post covers the core concepts of vectors, matrices, and basic linear algebra operations, making them accessible to anyone curious about the math behind AI.

Vectors and Matrices

Imagine a grocery list with various items and their corresponding quantities. We can translate this into a vector, a one-dimensional array of numbers. In AI, vectors often represent data points, where each element corresponds to a specific feature. For instance, a vector describing an image might include pixel intensities at different locations.

Definition. A k-dimensional vector y is an ordered collection of k real numbers y_1, y_2, ..., y_k, and is written as y = (y_1, y_2, ..., y_k). The numbers y_j (j = 1, 2, ..., k) are called the components of the vector y.

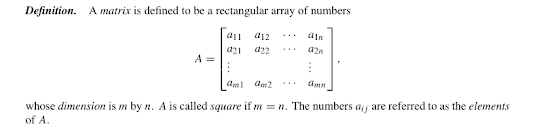

Now, imagine a collection of grocery lists, each representing a different shopping trip. To organize these, we can use a matrix, a two-dimensional array of numbers where rows represent individual lists and columns represent specific items. Matrices are the workhorses of linear algebra, allowing us to represent and manipulate complex data sets efficiently.
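The grocery-list picture can be sketched in NumPy. The item names and quantities below are made up for illustration; the only point is that one list becomes a one-dimensional array and several lists stacked together become a two-dimensional array.

```python
import numpy as np

# Hypothetical item order shared by every list: apples, bread, milk, eggs
items = ["apples", "bread", "milk", "eggs"]

# One shopping trip is a vector: one quantity per item
trip = np.array([3, 1, 2, 12])

# Several trips stacked row by row form a matrix
# (rows = individual lists, columns = specific items)
trips = np.array([
    [3, 1, 2, 12],
    [0, 2, 1, 6],
    [5, 0, 3, 0],
])

print(trip.shape)    # one axis with 4 components
print(trips.shape)   # 3 rows (trips) by 4 columns (items)
print(trips[1])      # the second trip as a vector
print(trips[:, 2])   # the milk column across all trips
```

Selecting a row gives back one list, and selecting a column gives the quantities of one item across every trip.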

Operations that Make Magic Happen

Linear algebra offers a powerful toolkit for manipulating vectors and matrices. Let's delve into some fundamental operations:

- Addition and Subtraction: Vectors and matrices of the same dimensions can be added or subtracted element-wise. Imagine adding two grocery lists – the resulting vector would have the sum of quantities for each item.

- Scalar Multiplication: A scalar (a single number) can be multiplied by a vector or matrix. This scales each element of the vector or matrix by the scalar value. Think of multiplying your grocery list by 2 – you'd double the quantity of each item.

Problem 1: Scaling an Image

Let's say you have a vector representing an image's pixel values (0 to 255 for intensity). To make the image brighter, you can multiply this vector by a scalar greater than 1 (e.g., 1.2). This increases the intensity of each pixel, effectively brightening the image.
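A minimal sketch of the brightening step, assuming 8-bit pixels stored as a NumPy array. The sample pixel values are invented, and the rounding and clipping are an added assumption: without them, a scaled value above 255 would not fit in the 0 to 255 range.

```python
import numpy as np

# Hypothetical pixel intensities in the 0..255 range
pixels = np.array([0, 50, 100, 200, 250], dtype=np.uint8)
factor = 1.2  # scalar greater than 1 brightens the image

# Multiply in floating point, round, then clip back into the valid range
scaled = np.round(pixels.astype(np.float64) * factor)
brightened = np.clip(scaled, 0, 255).astype(np.uint8)

print(brightened)
```

The last pixel would scale to 300, so it is capped at 255. Very bright regions therefore saturate rather than keep growing.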

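- Vector Dot Product: This operation multiplies the corresponding components of two vectors of the same length and sums the results, producing a single number. Geometrically, it equals the length of one vector's projection onto the other, multiplied by the other vector's length, so it is large when the two vectors point in similar directions. Imagine two grocery lists representing dietary needs: the dot product gives a rough measure of how well these lists align in terms of nutritional content.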

Problem 2: Recommendation Systems

Recommendation systems, like those used by streaming services, often rely on the dot product. These systems represent user profiles and movie descriptions as vectors. The dot product between these vectors indicates how well a movie aligns with a user's preferences, helping recommend movies they might enjoy.
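As a toy illustration of this idea, the sketch below scores three movies against one user by taking dot products. The feature names, titles, and numbers are all hypothetical; real recommendation systems learn such vectors from data and are considerably more involved.

```python
import numpy as np

# Hypothetical feature order: action, comedy, romance
user = np.array([0.9, 0.1, 0.3])   # how much the user likes each genre

titles = ["Movie A", "Movie B", "Movie C"]
movies = np.array([
    [1.0, 0.0, 0.2],   # mostly action
    [0.1, 0.9, 0.4],   # mostly comedy
    [0.3, 0.2, 1.0],   # mostly romance
])

# One dot product per movie: each row of `movies` dotted with `user`
scores = movies @ user

# Sort movie indices from highest score to lowest
ranking = np.argsort(scores)[::-1]
for idx in ranking:
    print(titles[idx], round(float(scores[idx]), 2))
```

With these made-up numbers the action-heavy title scores highest, because the user's vector puts most of its weight on the action feature.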

- Matrix Multiplication: This operation combines two matrices to produce a new one. Each entry of the result is the dot product of a row of the first matrix with a column of the second, so the number of columns in the first matrix must equal the number of rows in the second. While seemingly complex, matrix multiplication is a cornerstone of AI algorithms like neural networks.

- Matrix Equality: Two matrices A and B are said to be equal, written A = B, if they have the same dimensions and their corresponding elements are equal, i.e., a_ij = b_ij for all i and j.
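To make matrix multiplication and matrix equality concrete, here is a small sketch with arbitrary example matrices. It computes the product with NumPy's `@` operator, rebuilds it entry by entry from row-by-column dot products, and then checks that the two results are equal.

```python
import numpy as np

A = np.array([[1, 2, 3],
              [4, 5, 6]])      # shape (2, 3)
B = np.array([[7, 8],
              [9, 10],
              [11, 12]])       # shape (3, 2)

# Built-in matrix product: (2, 3) times (3, 2) gives (2, 2)
C = A @ B

# Manual version: entry (i, j) is row i of A dotted with column j of B
manual = np.zeros((A.shape[0], B.shape[1]), dtype=int)
for i in range(A.shape[0]):
    for j in range(B.shape[1]):
        manual[i, j] = A[i, :].dot(B[:, j])

print(C)
# Equal matrices: same dimensions and the same corresponding elements
print(np.array_equal(C, manual))
```

Note that `A @ B` differs from `A * B`: the latter is element-wise multiplication and requires compatible shapes.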

Problem 3: Image Classification

Convolutional Neural Networks (CNNs), a type of neural network used for image recognition, rely heavily on convolutions, which are commonly implemented as matrix multiplications. Here, image data is represented as matrices (or higher-dimensional arrays), and the network applies a series of these operations to extract features and ultimately classify the image content.
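One way to see the link between convolution and matrix multiplication is to unroll every image patch into a row and multiply by the flattened kernel. The sketch below does this for a tiny made-up image and kernel. It is an illustration of the idea only, not how any particular framework implements it, and it computes the sliding-window product without flipping the kernel, which is what deep learning libraries usually call convolution.

```python
import numpy as np

# Hypothetical 4x4 "image" and 2x2 kernel
image = np.arange(16, dtype=float).reshape(4, 4)
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])

kh, kw = kernel.shape
out_h = image.shape[0] - kh + 1   # no padding, stride 1
out_w = image.shape[1] - kw + 1

# Unroll every kh x kw patch of the image into one row
patches = np.array([
    image[r:r + kh, c:c + kw].ravel()
    for r in range(out_h)
    for c in range(out_w)
])                                 # shape (out_h * out_w, kh * kw)

# A single matrix-vector product computes every output position at once
feature_map = (patches @ kernel.ravel()).reshape(out_h, out_w)
print(feature_map)
```

Because each pixel in this toy image is 5 less than its lower-right diagonal neighbour, every output value is -5. With several kernels, the flattened kernels become columns of a matrix and the same step becomes a full matrix multiplication.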

Python: Vectors [Use online compiler]

import numpy as np

lst = [10,20,30,40,50]

vctr1 = np.array(lst)

vctr2 = 2*np.array(lst)

print("Vector created from a list:")

print(vctr1)

print(vctr2)

vctr_add = vctr1+vctr2

print("Addition of two vectors: ",vctr_add)

vctr_sub = vctr1-vctr2

print("Subtraction of two vectors: ",vctr_sub)

vctr_mul = vctr1*vctr2

print("Multiplication of two vectors: ",vctr_mul)

vctr_div = vctr1/vctr2

print("Division of two vectors: ",vctr_div)

vctr_dot = vctr1.dot(vctr2)

print("Dot product of two vectors: ",vctr_dot)

Output:

Vector created from a list:

[10 20 30 40 50]

[ 20 40 60 80 100]

Addition of two vectors: [ 30 60 90 120 150]

Subtraction of two vectors: [-10 -20 -30 -40 -50]

Multiplication of two vectors: [ 200 800 1800 3200 5000]

Division of two vectors: [0.5 0.5 0.5 0.5 0.5]

Dot product of two vectors: 11000


Python: Matrices

import numpy as np

# A matrix stored as a plain nested list

A = [[1, 4, 5, 12], [-5, 8, 9, 0], [-6, 7, 11, 19]]

print("A =", A)

print("A[1] =", A[1])  # 2nd row

print("A[1][2] =", A[1][2])  # 3rd element of 2nd row

print("A[0][-1] =", A[0][-1])  # last element of 1st row

column = []  # empty list

for row in A:
    column.append(row[2])  # collect the 3rd element of every row

print("3rd column =", column)

# The same idea with NumPy arrays

A = np.array([[2, 4], [5, -6]])

B = A.transpose()

print("A", A)

print("Transpose of A", B)

C = A + B  # element-wise addition

print("Sum of A & B = ", C)

C = A - B  # element-wise subtraction

print("Subtraction of A & B = ", C)

C = A * B  # element-wise multiplication (not the matrix product)

print("Multiplication of A & B = ", C)

C = A / B  # element-wise division

print("Div of A & B = ", C)

Output:

A = [[1, 4, 5, 12], [-5, 8, 9, 0], [-6, 7, 11, 19]]

A[1] = [-5, 8, 9, 0]

A[1][2] = 9

A[0][-1] = 12

3rd column = [5, 9, 11]

A [[ 2 4]
 [ 5 -6]]

Transpose of A [[ 2 5]
 [ 4 -6]]

Sum of A & B = [[ 4 9]
 [ 9 -12]]

Subtraction of A & B = [[ 0 -1]
 [ 1 0]]

Multiplication of A & B = [[ 4 20]
 [20 36]]

Div of A & B = [[1. 0.8 ]
 [1.25 1. ]]

While these are just a few fundamental operations, linear algebra offers a broader spectrum of tools for AI applications, including:

- Eigenvectors and Eigenvalues: These concepts help identify patterns and reduce dimensionality in data, which is crucial for handling large datasets used in AI.

- Linear Regression: This method models the relationship between input variables and an output as a linear function, and is often used for tasks like predicting stock prices or analyzing customer behavior.
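Both techniques can be tried in a few lines of NumPy. The sketch below uses a small symmetric matrix and four data points chosen purely for illustration: it computes eigenvalues and eigenvectors with `np.linalg.eigh`, then fits a straight line by least squares with `np.linalg.lstsq`.

```python
import numpy as np

# --- Eigenvalues and eigenvectors of a small symmetric matrix ---
S = np.array([[2.0, 1.0],
              [1.0, 2.0]])
eigenvalues, eigenvectors = np.linalg.eigh(S)   # eigenvalues in ascending order
print("Eigenvalues:", eigenvalues)

# Check the defining property S v = lambda v for the largest eigenvalue
v = eigenvectors[:, -1]
print("S v equals lambda v:", np.allclose(S @ v, eigenvalues[-1] * v))

# --- Linear regression by least squares ---
# Hypothetical data lying exactly on the line y = 2x + 1
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 5.0, 7.0])

# Design matrix: one column for x, one column of ones for the intercept
X = np.column_stack([x, np.ones_like(x)])
coeffs, residuals, rank, singular_values = np.linalg.lstsq(X, y, rcond=None)
slope, intercept = coeffs
print("slope:", slope, "intercept:", intercept)
```

`eigh` is used here because the example matrix is symmetric; for general square matrices `np.linalg.eig` is the usual choice. Real data will not lie exactly on a line, in which case the fitted slope and intercept minimize the squared error rather than reproduce the data.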

The Future: A Symbiotic Relationship

The relationship between AI and linear algebra is a two-way street. As AI continues to evolve, it pushes the boundaries of linear algebra, requiring new methods for efficient data manipulation. Conversely, advancements in linear algebra provide powerful tools for developing innovative AI algorithms.

This journey through vectors, matrices, and basic linear algebra operations has hopefully demystified some of the mathematical foundations of AI. With a solid understanding of these concepts, you can gain a deeper appreciation for the sophistication and ingenuity behind AI technologies shaping our world.

Remember, this is just the beginning of an exciting exploration. By embracing the power of mathematics, we can unlock the full potential of AI and create a future fueled by both human ingenuity and machine intelligence.

Further Reading:

1. Quantstart 1 and Quantstart 2

2. Dot Product

3. Convolutional Neural Networks

![{\displaystyle {\begin{aligned}\ [1,3,-5]\cdot [4,-2,-1]&=(1\times 4)+(3\times -2)+(-5\times -1)\\&=4-6+5\\&=3\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a6f1f0d7669d35eb1220c3256ea458319c80f713)
